I Tested Krea.ai, Midjourney, and Visual Electric So You Don't Have To

What I learned after three months of real usage

Summary: After struggling with generic stock photos for a client project, this designer spent three months testing Krea.ai, Midjourney, and Visual Electric to find which AI image generation tools actually work for professional design. Krea.ai excels at custom training and real-time generation, Midjourney produces beautiful but obviously AI-generated images, and Visual Electric offers the best workflow integration for designers. The biggest takeaway: these tools replace old creative constraints (budget, availability) with new ones (algorithmic aesthetics, platform limitations) that designers need to understand and work within.

Earlier this summer, I was creating a presentation slide deck for a consulting project, scrolling through my 47th page of stock photos trying to find imagery that matched the client's brand tone. They had a sophisticated, minimalist aesthetic (think clean lines, muted colors, premium feel), but every search returned the same sterile corporate imagery.

"Modern minimalist" gave me stark white backgrounds with floating objects that felt cold rather than premium. "Sophisticated business" delivered more of those forced handshakes and people pointing at charts. I tried "luxury minimal" and got marble surfaces with gold accents that screamed high-end retail, not the thoughtful professionalism my client embodied.

I needed imagery that felt authentic to their brand, but everything looked like it came from the same generic playbook. After two hours of searching, I was no closer to finding anything that fit.

This wasn't the first time I'd hit this wall. As someone who's spent years crafting visual experiences, I've felt this tension between having a clear vision and the constraints of available imagery. Stock photos rarely capture the exact mood or aesthetic I'm after. Custom photography isn't always in the budget or timeline.

I'd played with various AI image generation tools in the past, but never seriously. That's when I decided it was time to evaluate them properly for my design practice.

The AI image generation space is crowded and confusing, so I wanted to be methodical about this.

The Testing Goal

Rather than getting swept up in feature comparisons or hype, I wanted to understand how these tools would actually work in practice.

The challenge with AI image generation tools is that they all sound impressive on paper. Every platform promises high-quality results, easy interfaces, and revolutionary capabilities. But the real question isn't what they can do in theory; it's how they perform when you're solving real creative problems.

I wanted to know which tools would genuinely improve my work: make it better, faster, or more creative. Which ones would I reach for under deadline pressure? Which interfaces felt intuitive versus frustrating? And which tools produced results professional enough to show clients?

I spent the better part of three months testing Krea.ai, Midjourney, and Visual Electric to see which ones actually delivered on their promises.

Krea.ai

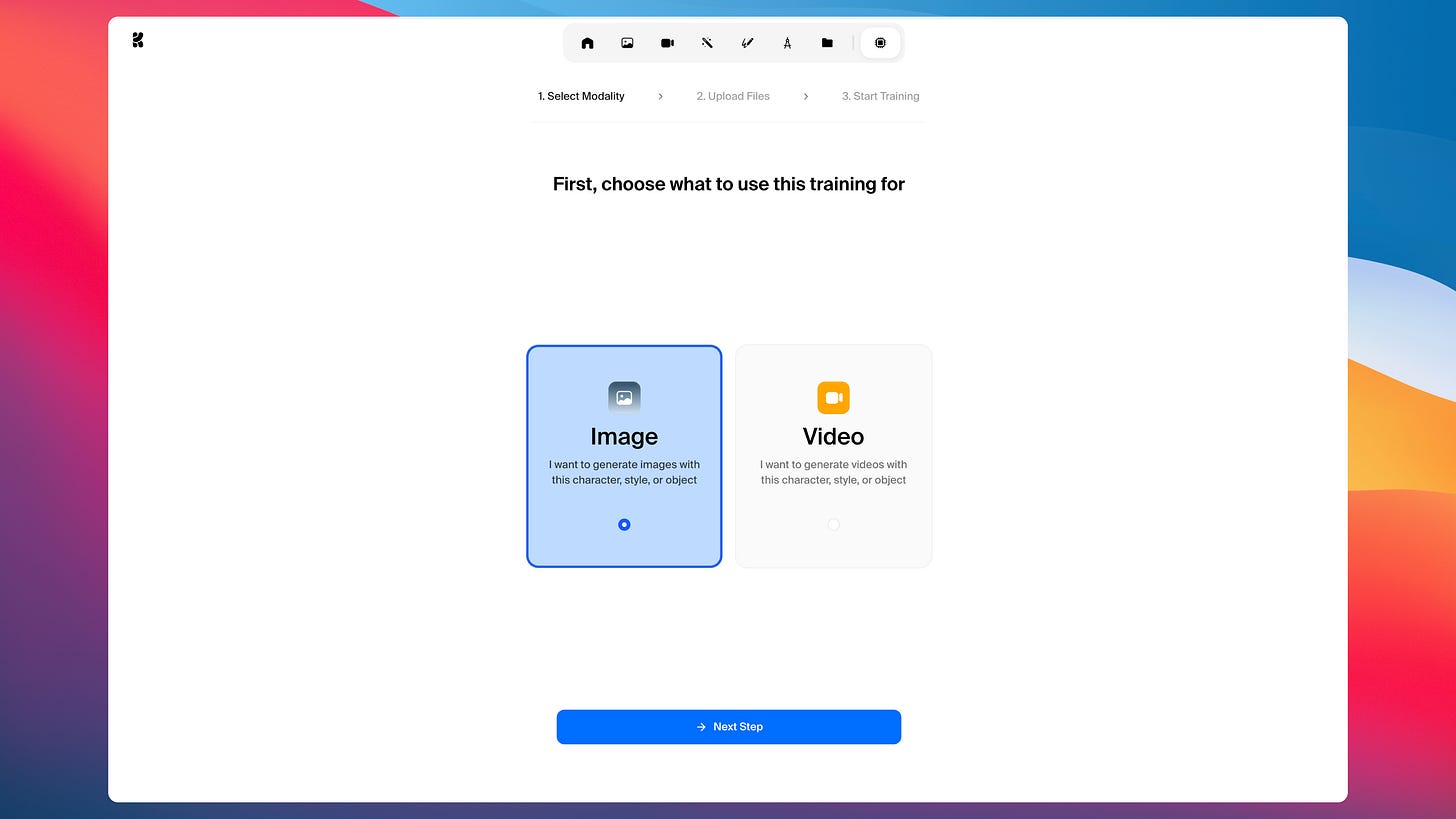

When I first opened Krea.ai, the training capabilities immediately caught my attention.

Krea.ai allows you to train the AI on your own images and style, teaching it your specific character, aesthetic or brand look. You upload a set of images and the platform learns from them, then generates new images matching that particular style. It's the kind of customization that could solve the brand-specific imagery problem I was facing.

Beyond the training capabilities, Krea.ai positions itself as a comprehensive visual creation tool. It offers real-time generation, video creation, image enhancement, and access to many diffusion models, including FLUX, Stable Diffusion, and Krea's own proprietary model.

It took me a while to find the training feature, but once I did, the process was straightforward. Getting comfortable with the platform itself took maybe five minutes; it's intuitive once you know where everything is.

Pros:

Real-time generation that eliminates waiting between iterations

Comprehensive toolkit beyond just image generation

Interface designed for creative workflow, not just prompting

Perfect for exploring wild ideas without commitment: you can test concepts that would never make it past a traditional creative brief

Excellent for client presentations where you need live adjustments

Community-driven styles and Krea styles are high quality and give you more control

Standard image and video file formats with multiple resolution options

Upscaling and image enhancement capabilities

Cons:

Technical limitations in advanced features

Resolution options feel limiting, though this is common across all these tools

What impressed me most was how this real-time approach changed my creative process. Instead of the typical prompt-wait-evaluate-restart cycle, I could make adjustments and see immediate results. For a designer, this feels more like actual designing than prompting.

For design work, Krea.ai excels when you need to explore concepts rapidly or when working with clients who want to see live adjustments during presentations. It's particularly valuable in the early conceptual phase when you're not yet committed to a specific visual direction.

Midjourney

While Krea.ai impressed me with its flexibility, Midjourney presented an entirely different challenge.

Midjourney's biggest issue, in my experience, is that you can spot its output from a mile away. The platform produces high-quality, technically impressive images, but they all have that unmistakable AI-generated look. When I'm trying to create imagery that feels natural and authentic, that's exactly what I'm trying to avoid.

Recently, Midjourney added video generation capabilities, allowing you to animate existing images into 5-second video sequences. While limited, this opens interesting possibilities for bringing static designs to life.

Midjourney's community aspect means you can see everyone else's prompts and results, which is educational but also means your work is public unless you pay for a higher tier.

Pros:

Strong community learning: you can see what others are creating

Version 7 personalization reportedly learns your preferences after you rate about 200 images, though I haven't tried it myself

New video generation turns static images into 5-second sequences

Cons:

Everything has that recognizable Midjourney aesthetic that screams "AI-generated"

Limited control over precise brand-specific aesthetics, although you can create moodboards to control style.

Potential legal implications due to training data concerns

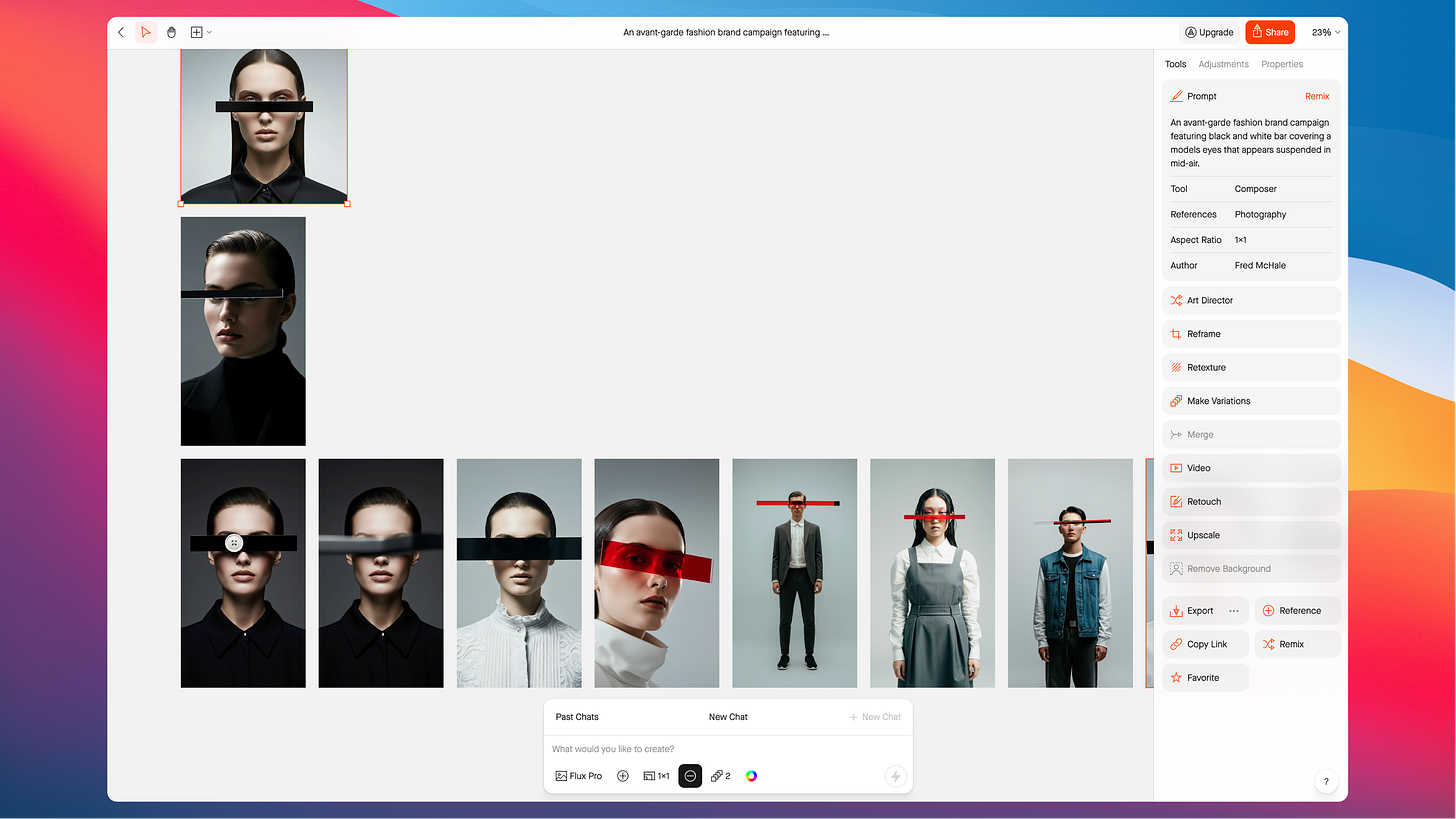

Visual Electric: Built Different

Visual Electric was created specifically with designers in mind, and this focus shows throughout the platform. Rather than treating image generation as a standalone activity, it integrates into existing design workflows. The most significant advantage is its integration with other design tools.

Pros:

Preset styles organized with design terminology that makes sense

Generative canvas approach feels like designing, not just generating

Real-time collaboration for teams working on the same canvas

"Art Director" feature refines prompts in design language

Cons:

I ran into more bugs than with the other two tools. At times I could not generate anything even though I had plenty of “volts” left, and I had to wait until the next day.

The platform offers preset styles, but more importantly, these styles are organized and named in ways that make sense to designers. Instead of abstract model names, you get categories like "Editorial Photography," "Product Mockups," and "Brand Identity" that map to actual design needs.

What sets Visual Electric apart is the generative canvas approach. Instead of generating isolated images, you work on an infinite canvas where you can layer, combine, and iterate on generated elements. This feels more like designing than generating.

The collaboration features allow team members to work together in real-time on the same canvas, addressing a real workflow challenge when multiple people need input on visual direction.

I hit the Visual Electric free tier limit within my first week of testing, which made it clear that the free tier won't sustain intensive use.

In my design practice, Visual Electric works best when you're working within established design tools and need AI generation that integrates into your workflow.

What I Actually Use

After three months of testing, here's how these tools have fit into my design practice and creative workflow.

For presentations, fun creative endeavors, new assets, and rapid ideation, I find myself reaching for Krea.ai. The real-time feedback makes it excellent for exploring concepts when I'm not sure what direction to take, and the training capabilities help me get closer to what I want faster.

When I need to edit images dramatically, or for production design work that needs to integrate with existing projects, Visual Electric has become indispensable. There is a lot to be said for its interface and how approachable it is for those with design tool experience.

From a designer's perspective, no single tool handles every use case perfectly. But combined, these two have fundamentally changed how I approach visual content creation, both professionally and personally.

Wrapping Up

These three months of testing have shown me something important: AI image generation isn't just about replacing stock photos. It's about expanding what's possible when you have an idea and want to see it visualized.

But what's become equally clear is that these tools come with their own creative constraints. Midjourney locks you into that distinctive aesthetic—even when you're trying to create something subtle for a conservative client. Krea.ai's training capabilities are powerful, but only if you already have imagery that represents your desired direction. Visual Electric's style categories, while organized well, are few.

We've traded the old constraints (budget, availability, technical skill) for new ones rooted in algorithmic biases and platform limitations. The question isn't whether these constraints are better or worse, but whether we're conscious of them. The best creative work has always emerged from understanding and working within constraints. The difference now is that we're creating within constraints we didn't choose and might not recognize.

We need to be aware of these new constraints. That awareness changes how I approach these tools. Instead of expecting them to solve all my visual problems, I work with their specific strengths and limitations, just like I would with any other creative medium.

If you're considering trying these tools, here's how to decide where to start:

Try Krea.ai if: You want to experiment with training custom styles, need real-time generation, want access to many AI image models, or want a comprehensive toolkit in one platform. It's especially good if you have existing brand imagery to train from or work with clients who want to see live iterations.

Try Midjourney if: You're okay with a distinctive AI aesthetic, want to learn from a strong community, or need high-quality imagery where the "AI look" isn't a concern.

Try Visual Electric if: You work primarily in Figma or other design tools, need preset styles that match design terminology, or want clean integration with your workflow. Ideal if you're already comfortable with canvas-based design tools and want AI generation that fits into existing processes.